Blog One

01. Road to Research

01

Developing My Research Question

Going into this class, I knew for sure that I wanted to research something related to artificial intelligence. After looking into it, I learned that one of the biggest applications of artificial intelligence is in the criminal justice system. There were two approaches I could have taken: artificial intelligence pretrial risk assessments or artificial intelligence facial recognition programs. However, a sub-goal of mine was to make my research specific to Colorado, and since Colorado didn’t use an artificial intelligence version of pretrial risk assessments, I went with facial recognition programs. I eventually found that Lumen is the AI program that Colorado law enforcement uses, but I also found that most agencies do not mention their facial recognition use anywhere online. Even though I was interested in how Colorado uses Lumen, the only public information I could find was a set of anonymous success stories, so I decided to narrow my research project to demographic bias in the program instead. I did a similar project in AP Seminar, so this time I wanted to approach the topic through a more technology-based lens. Through that lens, I found many cases where people of color and women were wrongfully identified by facial recognition algorithms. I then found a database of facial recognition algorithms studied by NIST, which reports how accurately each algorithm compares an “impostor” against an “enrollee” based on age, sex, and ethnicity. In this database, I quickly identified the facial recognition service (FRS) that Colorado uses, rank_one, and found seven different versions of that program, ranging from 2019 to 2023. After seeing how much the data differed from version to version, I finalized my research question.

02

Background

Lumen FRS is a facial recognition service that helps police departments identify potential suspects by comparing an uploaded image to a database of faces provided by the Colorado Information Sharing Consortium. The program is used to find potential suspects in criminal investigations. However, it does not decide whether the image matches someone in the database; it only ranks potential matches for human review. Lumen results also cannot be the sole basis for establishing probable cause. More than 80 Colorado agencies have paid for the Lumen program, but only a few have made public announcements about it to ensure transparency. The FRS (facial recognition service) within the Lumen platform is powered by SDK version 2.2.1 of the algorithm software from Rank One Computing Corporation (ROC), a Denver-based company.

However, there is an underlying issue of demographic bias in these facial recognition services. Just a month ago, Randal Quran Reid, a Black man, was wrongfully arrested and held in jail for a week because officers of the Jefferson Parish Sheriff's Office used facial recognition technology to identify Reid as a theft suspect. This is just one of many cases in which facial recognition flaws have led to wrongful jail time, with people of color and women especially affected. As facial recognition technology is implemented more widely in the criminal justice system, we need to ensure that we are working towards reducing the false positive error rate (the rate at which the program mistakes one person for another) for minorities.

03

Methodology

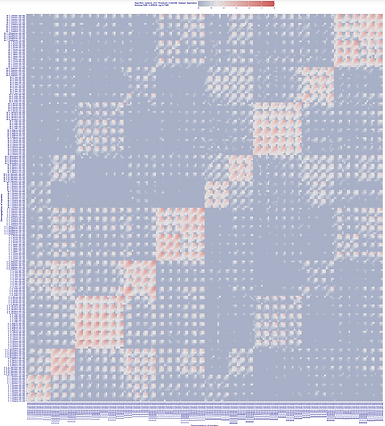

For each of the seven versions of rank_one, I will access the data comparing high-quality frontal portrait images of two people, controlling for sex, age group, and region of birth. The data for each version should look similar to Figure 1. In this chart, each sex/ethnicity/age combination (e.g., Male, Japan, 12-20) is compared against another sex/ethnicity/age combination (e.g., Male, Korea, 20-35). Each pairing produces a color, which can be matched to the scale (Figure 2) to get a numerical value from -6 to 0. I will do this by dividing the scale into 0.01 intervals and using a color picker tool to match each box's color to the scale and read off its value. Because the scale is logarithmic (base 10), the value for each box has to be converted back with FPMR = 10^value. The small number that results is the FPMR (false positive match rate). For example, in sensetime_005, Nigerian women aged 65 and over compared to other Nigerian women aged 65 and over have a value of -1.55, which becomes 10^(-1.55) = 0.0281838293. This means the FPMR is roughly 1 in 35 (1/35 = 0.0285714286). I will apply the same process to find the FPMRs for the seven versions of rank_one. I will only do this for pairs of individuals who share at least one group in common (sex, ethnicity, or age).
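As a quick check on the conversion described above, here is a minimal Python sketch. The function names `log10_to_fpmr` and `one_in_n` are my own for illustration and are not part of the NIST data; the only input taken from this post is the example reading of -1.55.

```python
def log10_to_fpmr(value: float) -> float:
    """Convert a log10 heat-map reading (between -6 and 0) into an FPMR."""
    if not -6.0 <= value <= 0.0:
        raise ValueError("reading must lie on the -6 to 0 scale")
    return 10.0 ** value


def one_in_n(fpmr: float) -> float:
    """Express an FPMR as 'one false match in N comparisons'."""
    return 1.0 / fpmr


# Example reading from the text: Nigerian women aged 65 and over.
fpmr = log10_to_fpmr(-1.55)
print(f"FPMR = {fpmr:.10f}")
print(f"about 1 in {one_in_n(fpmr):.1f}")
```

The "1 in N" figure is just the reciprocal of the FPMR, so the -1.55 reading works out to a little over 35, which is where the rounded "1 in 35" comes from.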

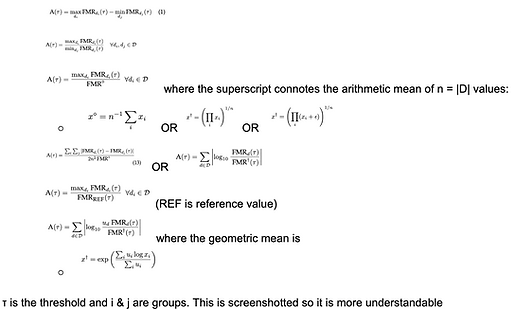
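The color-matching step can be sketched in Python as a nearest-color lookup. This is only an illustration under stated assumptions: the real colors in Figure 2 are not reproduced here, so `build_scale` fills in a hypothetical blue-to-red gradient, and both function names are my own. In practice the scale colors would be sampled from the image itself.

```python
def build_scale(low_rgb, high_rgb, lo=-6.0, hi=0.0, step=0.01):
    """Build (value, rgb) pairs every `step` along a HYPOTHETICAL linear gradient."""
    n = round((hi - lo) / step)
    scale = []
    for i in range(n + 1):
        t = i / n
        rgb = tuple(a + t * (b - a) for a, b in zip(low_rgb, high_rgb))
        scale.append((round(lo + i * step, 2), rgb))
    return scale


def nearest_value(picked_rgb, scale):
    """Return the scale value whose color is closest to the picked color."""
    def dist2(rgb):
        # Squared Euclidean distance in RGB space.
        return sum((p - c) ** 2 for p, c in zip(picked_rgb, rgb))
    return min(scale, key=lambda entry: dist2(entry[1]))[0]


# Assumed gradient: blue at -6, red at 0 (not the actual Figure 2 colors).
scale = build_scale((0, 0, 255), (255, 0, 0))
value = nearest_value((189, 0, 66), scale)
print(len(scale), value)
```

Matching by nearest color rather than exact color matters because a color picker returns whole-number RGB values, which rarely land exactly on one of the 601 scale steps.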

Once I have collected this information into seven data tables, one per version, I will begin the data analysis. For each version, I will calculate FPMR (false positive match rate) equity measures using a variety of formulas. The FPMRs I consider are false matches between individuals who share a group. This means that I do not consider FPMRs for pairs that fall in different groups for all of ethnicity, sex, and age. The list of equations that I will be using to calculate the FPMR equity measures is attached below.
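To give a sense of what an equity measure looks like in code, here is a small Python sketch. The two measures shown (worst group divided by best group, and worst group divided by the geometric mean) are stand-ins that I am assuming for illustration; they are not necessarily among the equations in my list, and the group names and FPMR values are made up.

```python
from statistics import geometric_mean


def max_min_ratio(fpmrs: dict) -> float:
    """Worst-case FPMR divided by best-case FPMR across groups (1.0 means equal)."""
    values = list(fpmrs.values())
    return max(values) / min(values)


def max_geomean_ratio(fpmrs: dict) -> float:
    """Worst-case FPMR divided by the geometric mean FPMR across groups."""
    values = list(fpmrs.values())
    return max(values) / geometric_mean(values)


# HYPOTHETICAL same-group FPMRs for one version (not real NIST values).
example = {"group_a": 1e-2, "group_b": 1e-3, "group_c": 1e-4}
print(max_min_ratio(example))
print(max_geomean_ratio(example))
```

Both measures are ratios, so a value of 1 would mean every group has the same FPMR, and larger values mean a wider gap between groups.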

After finding the FPMRs for each version, I will analyze the nine FPMR equity measures in chronological order. I will identify which version showed the most improvement in accuracy relative to the time elapsed since the previous version. I will also check whether the proportional increase in accuracy is the same across the different equations. Finally, I want to see which demographic groups had the largest and smallest increases in accuracy.
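One way to compare versions "with respect to time" is to divide each version's relative improvement by the number of years since the previous version. The Python sketch below assumes that definition, which is my own choice for illustration, and uses placeholder version names, dates, and measure values rather than real rank_one data.

```python
from datetime import date


def improvement_rates(versions):
    """Relative decrease in an equity measure per year between consecutive versions.

    `versions` is a chronologically sorted list of (name, release_date, measure),
    where a lower measure means better (more equitable) performance.
    """
    rates = []
    for (_, d0, m0), (name, d1, m1) in zip(versions, versions[1:]):
        years = (d1 - d0).days / 365.25
        rates.append((name, (m0 - m1) / m0 / years))
    return rates


# HYPOTHETICAL versions and measure values, for illustration only.
versions = [
    ("v1", date(2019, 1, 1), 100.0),
    ("v2", date(2020, 1, 1), 50.0),
    ("v3", date(2022, 1, 1), 40.0),
]
rates = improvement_rates(versions)
best_name, best_rate = max(rates, key=lambda r: r[1])
print(rates)
print("fastest improvement:", best_name)
```

Dividing by elapsed time keeps a version released after a long gap from looking better than one that made the same gain in a few months.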

Media

This is an example of the data collected per version.

This is the list of equations I will be using to find the false positive match rate (FPMR).

References

Grother, Patrick, et al. Face Recognition Vendor Test Part 3: Demographic Effects. National Institute of Standards and Technology, Dec. 2019. Crossref, doi:10.6028/nist.ir.8280.

Duewer, David L. Face Recognition Vendor Test (FRVT) Part 8: Summarizing Demographic Differentials. National Institute of Standards and Technology, 2022. Crossref, doi:10.6028/nist.ir.8429.

De Freitas Pereira, Tiago, and Sébastien Marcel. “Fairness in Biometrics: A Figure of Merit to Assess Biometric Verification Systems.” IEEE Transactions on Biometrics, Behavior, and Identity Science, vol. 4, no. 1, Institute of Electrical and Electronics Engineers, 1 Jan. 2022, pp. 19–29, doi:10.1109/tbiom.2021.3102862.